jmtd → Jonathan Dowland's Weblog

Below are the five most recent posts in my weblog. You can also see a chronological list of all posts, dating back to 1999.

I've been enjoying Biosphere as the soundtrack to my recent "concentrated work" spells.

I remember seeing their name on playlists of yesteryear: axioms, bluemars[1], and (still a going concern) soma.fm's drone zone.

[1] Bluemars lives on, at echoes of bluemars.

I recently became a maintainer of/committer to IkiWiki, the software that powers my site. I also took over maintenance of the Debian package. Last week I cut a new upstream point release, 3.20200202.4, and a corresponding Debian package upload, consisting only of a handful of low-hanging-fruit patches from other people, largely to exercise both processes.

I've been discussing IkiWiki's maintenance situation with some other users for a couple of years now. I've also weighed up the pros and cons of moving to a different static-site-generator (a term that describes what IkiWiki is, but was actually coined more recently). It turns out IkiWiki is exceptionally flexible and powerful: I estimate the cost of moving to something modern(er) and fashionable such as Jekyll, Hugo or Hakyll as unreasonably high, in part because they are surprisingly rigid and inflexible in some key places.

Like most mature software, IkiWiki has a bug backlog. Over the past couple of weeks, as a sort-of "palate cleanser" around work pieces, I've tried to triage one IkiWiki bug per day: either upstream or in the Debian Bug Tracker.

This is a really lightweight task: it can be as simple as "find a bug reported in Debian, copy it upstream, tag it upstream, mark it forwarded", perhaps taking 5-10 minutes.

As I go, I often stumble across something that has already been fixed but not recorded as such.

Despite this minimal level of work, I'm quite satisfied with the cumulative progress. It's notable to me how much my perspective has shifted by becoming a maintainer: I'm considering everything through a different lens to that of being just one user.

Eventually I will put some time aside to scratch some of my own itches (html5 by default; support dark mode; duckduckgo plugin; use the details tag...) but for now this minimal exercise is of broader use.

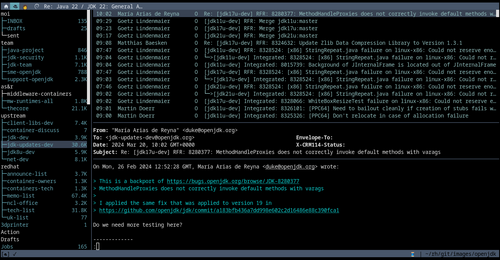

I started looking at aerc, a new terminal mail client, in around 2019. At that time it was promising, but ultimately not ready for me yet, so I put it away and went back to neomutt, which I have been using (in one form or another) all century.

These days, I use neomutt as an IMAP client which is perhaps what it's worst at: prior to that, and in common with most users (I think), I used it to read local mail, either fetched via offlineimap or directly on my mail server. I switched to using it as a (slow, blocking) IMAP client because I got sick of maintaining offlineimap (or mbsync), and I started to use neomutt to read my work mail, which was too large (and rate limited) for local syncing.

This year I noticed that aerc had a new maintainer who was presenting about it at FOSDEM, so I thought I'd take another look. It's come a long way: far enough to actually displace neomutt for my day-to-day mail use. In particular, it's a much better IMAP client.

I still reach for neomutt for some tasks, but I'm now using aerc for most things.

aerc is available in Debian, but I recommend building from upstream source at the moment as the project is quite fast-moving.

How can I not have done one of these for Propaganda already?

Propaganda/A Secret Wish is criminally underrated. There seem to be a zillion variants of each track, which keeps completionists busy. Of the variants of Jewel/Duel/etc., I'm fond of the 03:10, almost instrumental mix of Jewel; I prefer the lyrics to be exclusive to the more radio-friendly Duel (04:42); I don't need the two conflated (Jewel 06:21); but there are further depths I've yet to explore (Do Well cassette mix, the 20:07 The First Cut / Duel / Jewel (Cut Rough) / Wonder / Bejewelled mega-mix...).

I recently watched The Fall of the House of Usher, which I think has lodged Poe in my brain, which is how this album popped back into my consciousness this morning, with the opening lines of Dream within a Dream.

But are they Goth?

I got a new work laptop this year: A Thinkpad X1 Carbon (Gen 11). It wasn't the one I wanted: I'd ordered an X1 Nano, which had a footprint very reminiscent to me of my beloved x40.

Never mind! The Carbon is lovely. Despite being ostensibly the same size as the T470s it's replacing, it's significantly more portable, and more capable. The two USB-A ports, as well as the full-size HDMI port, are welcome and useful (over the Nano).

I used to keep notes on setting up Linux on different types of hardware, but I haven't really bothered now for years. Things Just Work. That's good!

My old machine naming schemes are stretched beyond breaking point (and I've re-used my favourite hostname, qusp, one too many times) so I went for something new this time: riffing on Carbon, I settled (for now) on carbyne, a carbon allotrope which is of interest to nanotechnologists (seems appropriate).

Older posts are available on the all posts page.